What’s the problem with my store? Could it be common technical SEO issues?

Many eCommerce websites don’t take SEO seriously and focus mostly on PPC and social media ads to promote their sites. eCommerce SEO is not easy, and it may take a lot of time and effort to set up. But if set up properly, it will bring you quality traffic that converts.

To audit your site, you should look at on-page SEO, off-page SEO, and technical SEO.

But don’t start your efforts with the first two. Technical SEO is one of the most crucial parts of your strategy, and you have to focus on it first. Technical SEO is the first step in creating a better search experience. Other SEO projects should come after you’ve guaranteed your site has proper usability.

Having run hundreds of Magento site audits over the years, we at MageCloud keep coming across the same technical SEO issues.

Here we share a few tips on where to start. Hopefully, our technical SEO checklist will help you find the SEO errors that hurt your Magento online store.

Be honest, when’s the last time you checked that everything was working right and you were getting the right results in terms of traffic and online authority?

I believe it’s definitely high time to take another look.

Technical SEO Checklist:

#2 Site Isn’t Indexed Correctly

#4 Missing or Incorrect Robots.txt

#9 Not Enough Use of Structured Data

#13 Mobile Device Optimization

#15 Missing or Non-Optimized Meta Descriptions and H1 tag

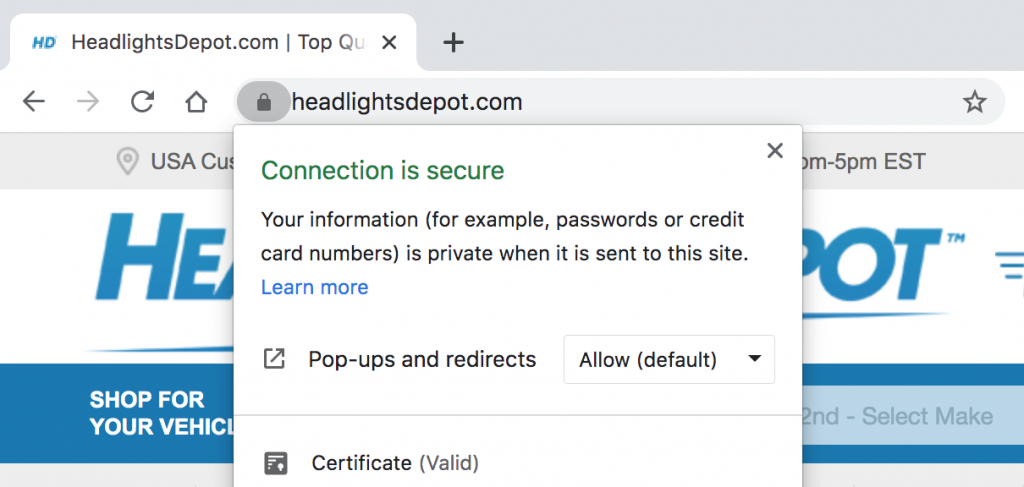

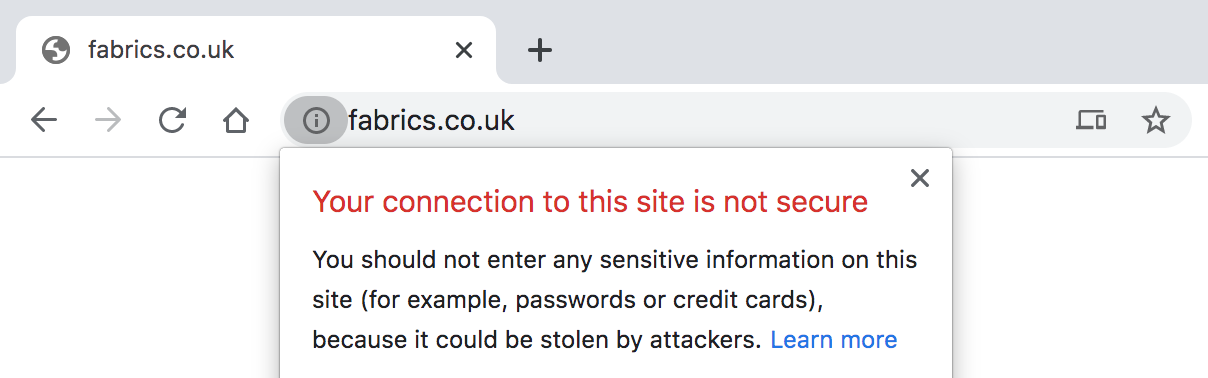

Security has always been “a top priority” for Google. In 2014 the search engine giant announced HTTPS as a ranking signal. Since October 2017, Chrome has shown “Not secure” warnings on HTTP pages where users enter data, and since July 2018 it has shown them on every HTTP page a user lands on.

The first step for this quick fix is to check if your site is HTTPS.
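A quick way to run this check is to request the plain HTTP address and see where you end up. The snippet below is a minimal sketch, not a full audit: `redirects_to_https` is a name we made up for illustration, and the `opener` parameter exists only so the check can be tried without touching the network.

```python
from urllib.parse import urlsplit
from urllib.request import urlopen


def redirects_to_https(domain, opener=urlopen):
    """Request the plain-HTTP URL and report whether we land on HTTPS.

    `opener` defaults to urllib's urlopen, which follows redirects;
    any callable returning an object with geturl() can be swapped in.
    """
    response = opener(f"http://{domain}/")
    final_url = response.geturl()  # URL after any redirects were followed
    return urlsplit(final_url).scheme == "https"
```

Calling `redirects_to_https("yourwebsite.com")` with the default opener makes a real request, so run it against your own store only.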

How to Fix

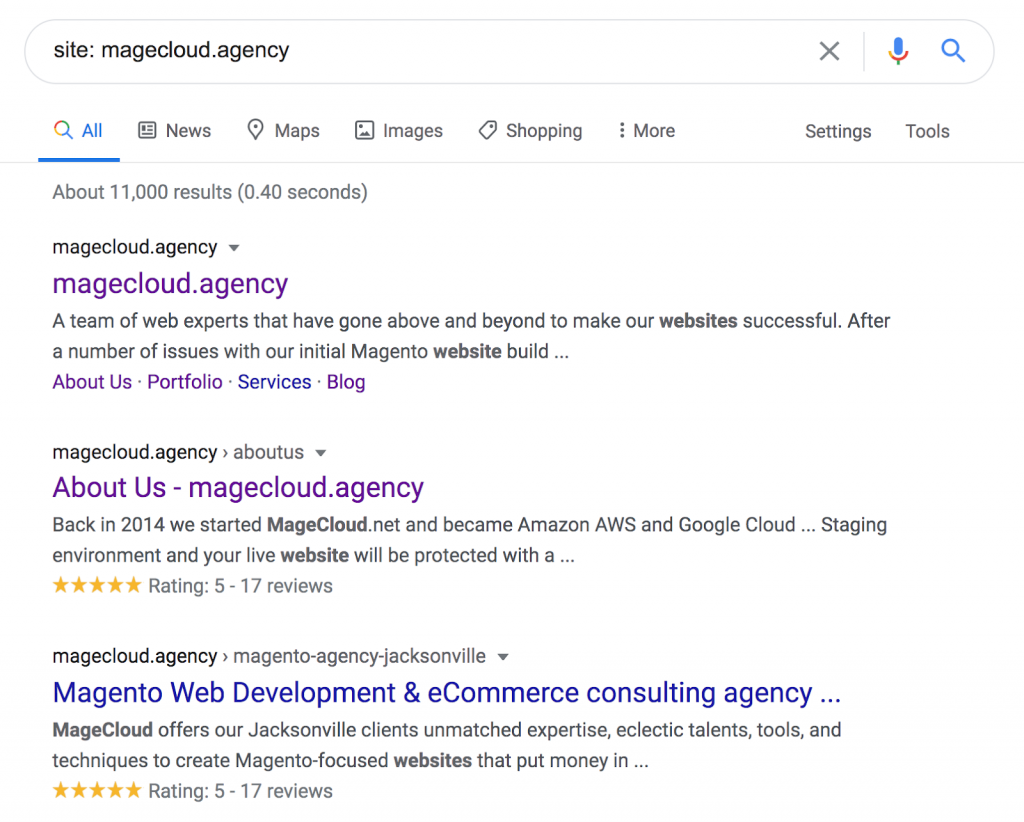

When you search for your brand name in Google, does your website show up in the search results? If the answer is no, there might be an issue with your indexation. As far as Google is concerned, if your pages aren’t indexed, they don’t exist — and they certainly won’t be found on the search engines.

How to Check

How to Fix

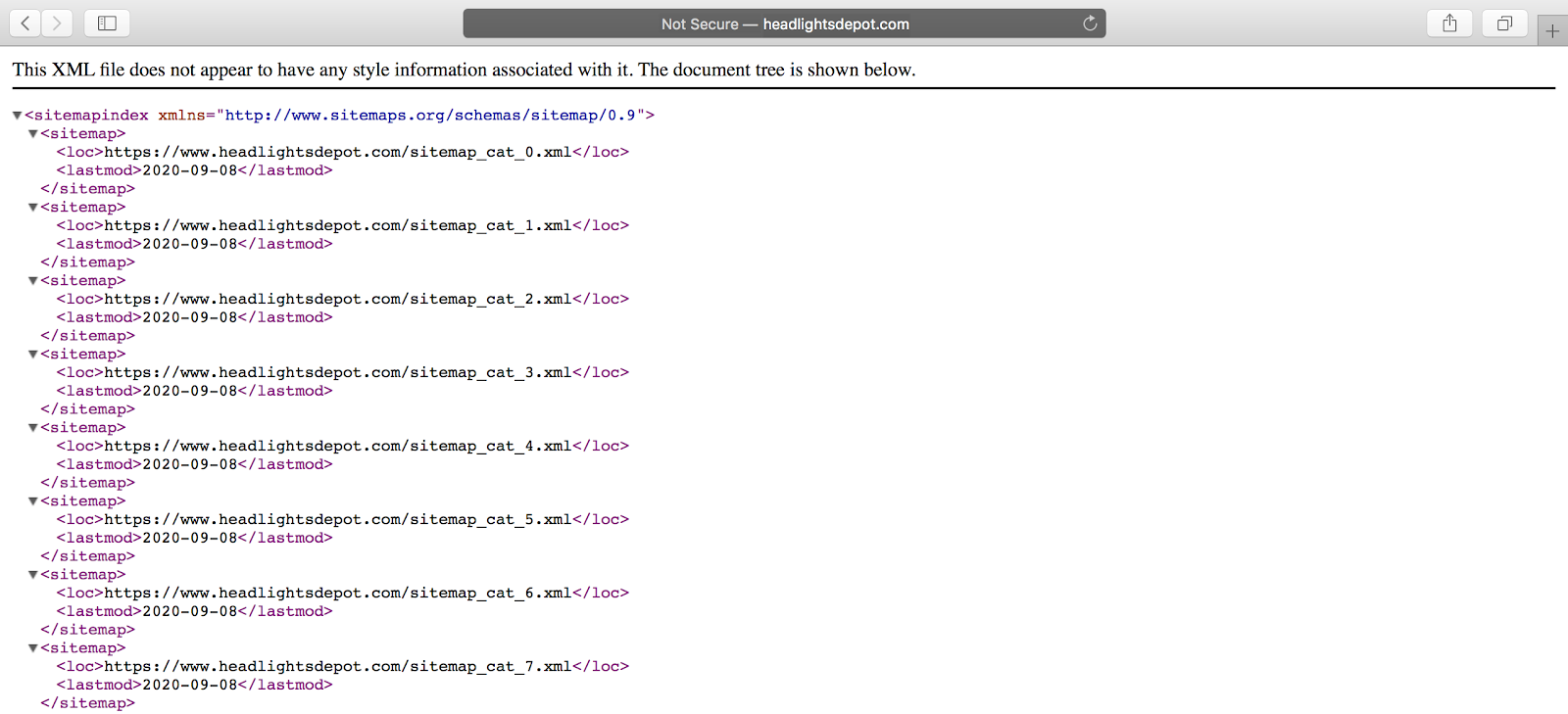

XML sitemaps help Google search bots understand more about your site pages, so they can effectively and intelligently crawl your site.
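If your platform does not already generate a sitemap, a basic one is easy to produce. Here is a minimal sketch that builds a sitemap in the sitemaps.org format using only the Python standard library. The function name and the example URLs are ours, and a real store would normally pull the URL list from its catalog.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap (one <url><loc> entry per URL)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped for us
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap_xml = build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/shoes",
])
```

The result can be saved as `sitemap.xml` and submitted in Search Console.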

How to Check

How to Fix

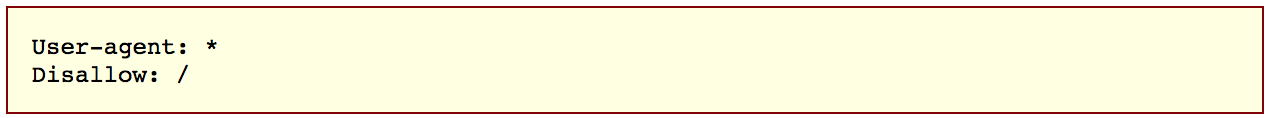

A missing robots.txt file is a big red flag — but did you also know that an improperly configured robots.txt file destroys your organic site traffic?
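To see what a robots.txt file actually blocks, you can test it against the URLs you care about. The sketch below uses Python's built-in `urllib.robotparser`. The `blocked_urls` helper and the sample rules are our own illustration, and the standard-library parser may not match Googlebot's rule matching in every edge case, so treat the result as a first pass.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# A leftover "Disallow: /" from a staging site blocks everything.
staging_rules = "User-agent: *\nDisallow: /\n"
live_rules = "User-agent: *\nDisallow: /checkout/\n"
important_urls = [
    "https://www.example.com/",
    "https://www.example.com/checkout/cart",
]
```

With `live_rules` only the checkout URL is blocked, while `staging_rules` blocks both, which is exactly the kind of misconfiguration that wipes out organic traffic.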

How to Check

How to Fix

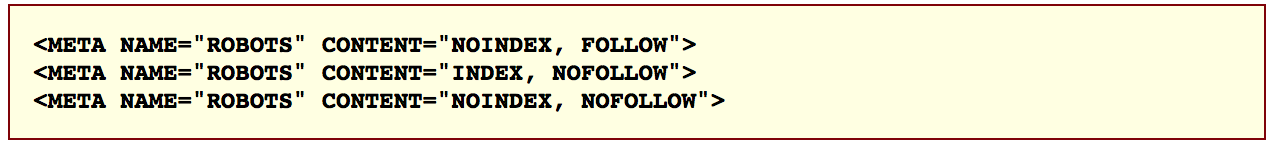

NOINDEX can be even more damaging than a misconfigured robots.txt at times.

Most commonly, the NOINDEX is set up when a website is in its development phase, but once the website goes live, it’s imperative to remove the NOINDEX tag.

Since so many web development projects are running behind schedule and pushed to live at the last hour, this is where the mistake can happen. Do not blindly trust that it was removed, as the results will destroy your site’s search engine visibility.

When the NOINDEX tag is appropriately configured, it signifies certain pages are of lesser importance to search bots. However, when configured incorrectly, NOINDEX can immensely damage your search visibility by removing all pages with a specific configuration from Google’s index.
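Rather than trusting that the tag was removed, you can scan the page source for it. This is a minimal sketch using the standard-library HTML parser. The class and function names are hypothetical, and it only looks at meta tags, not at an `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def has_noindex(html):
    """Return True if the page source carries a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives
```

Running `has_noindex` over the source of your key landing pages right after launch is a cheap safeguard.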

How to Check

How to Fix

Remember when you discovered that “yourwebsite.com” and “www.yourwebsite.com” go to the same place? While this is convenient, it also means Google may be indexing multiple URL versions, diluting your site’s visibility in search.

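Every site should have the following two properties set up in Search Console: http://yourwebsite.com and http://www.yourwebsite.com.

If your website has an SSL certificate set up, as an eCommerce website should, you would also add two additional properties: https://yourwebsite.com and https://www.yourwebsite.com.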

That brings our total to four site versions, all being treated differently! The average user doesn’t really care if your home page shows up as all of these separately, but the search engines do.
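To make the four versions concrete, here is a small sketch that lists them for a domain and rewrites any of them to one preferred version. The helper names and the choice of `https://www.yourwebsite.com` as the preferred version are assumptions for illustration. On a live store this mapping is normally enforced with server-side 301 redirects rather than in Python.

```python
from urllib.parse import urlsplit, urlunsplit


def site_variants(domain):
    """List the four scheme/host combinations a site can be reached under."""
    bare = domain[4:] if domain.startswith("www.") else domain
    return [
        f"{scheme}://{host}/"
        for scheme in ("http", "https")
        for host in (bare, f"www.{bare}")
    ]


def to_preferred(url, preferred="https://www.yourwebsite.com"):
    """Rewrite a URL onto the preferred scheme and host, keeping path and query.

    Assumes `url` already belongs to the same site; no host check is done.
    """
    target = urlsplit(preferred)
    parts = urlsplit(url)
    return urlunsplit(
        (target.scheme, target.netloc, parts.path or "/", parts.query, parts.fragment)
    )
```

For example, `to_preferred("http://yourwebsite.com/shoes?color=red")` gives `https://www.yourwebsite.com/shoes?color=red`.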

If Search Console is successfully set up for your website (preferred site version is set), you should begin to only see one of the site versions getting all the attention.

Setting the preferred site version in Google Search Console is a good first step in addressing the numerous versions of your site, but it’s more than just selecting how you want Google to index your website. Your preferred site version affects every reference of your website’s URL – both on and off the site.

How to Check

How to Fix

Rel=canonical is particularly important for all sites with duplicate or very similar content (especially e-commerce sites).

Dynamically rendered pages (like a category page of blog posts or products) can look like duplicate content to Google search bots. The rel=canonical tag tells search engines which “original” page is of primary importance (hence: canonical) — similar to URL canonicalization.
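One common pattern on category pages is to point sorted and tracked variations of a URL back at a single canonical address. The sketch below shows the idea. The list of ignored parameters is hypothetical and has to be adapted to your store, since some parameters (pagination, for example) may deserve their own canonical URL.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that only change presentation or tracking.
IGNORED_PARAMS = {"sort", "order", "dir", "limit", "utm_source", "utm_medium", "utm_campaign"}


def canonical_url(url):
    """Drop presentation/tracking parameters and the fragment from a URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_tag(url):
    """Build the <link rel="canonical"> tag for the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'
```

Here `https://www.example.com/shoes?sort=price&limit=24` and `https://www.example.com/shoes` both resolve to the same canonical tag.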

How to Fix

With more and more brands using dynamically created websites and content management systems, and practicing global SEO, duplicate content plagues many websites.

The problem with duplicate content is that it may “confuse” search engine crawlers and prevent the correct content from being served to your target audience.
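A rough way to spot exact duplicates is to fingerprint the text of each page and group URLs that share a fingerprint. This is only a sketch under simple assumptions: the page text has already been extracted, the function names are ours, and hashing catches identical copies only, not near-duplicates.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Group URLs whose text is identical after normalization.

    `pages` maps URL -> extracted page text.
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]
```

Each group returned is a candidate for a canonical tag or a redirect.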

Usually, duplicate content can occur for many reasons:

How to Check

How to Fix

Each of the issues mentioned above can be resolved respectively with:

Google’s support page offers other ideas to help limit duplicate content including using 301 redirects, top-level domains, and limiting boilerplate content.

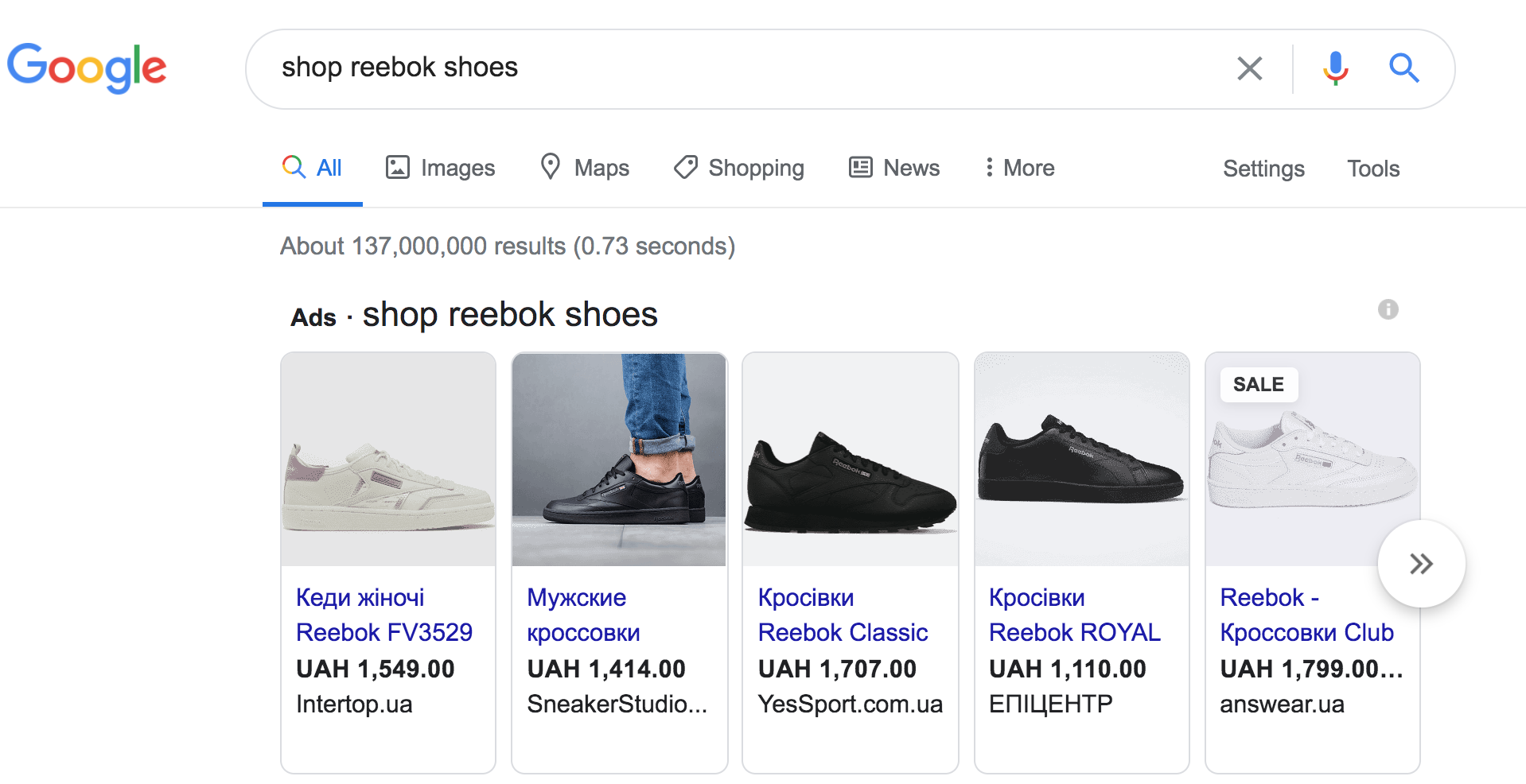

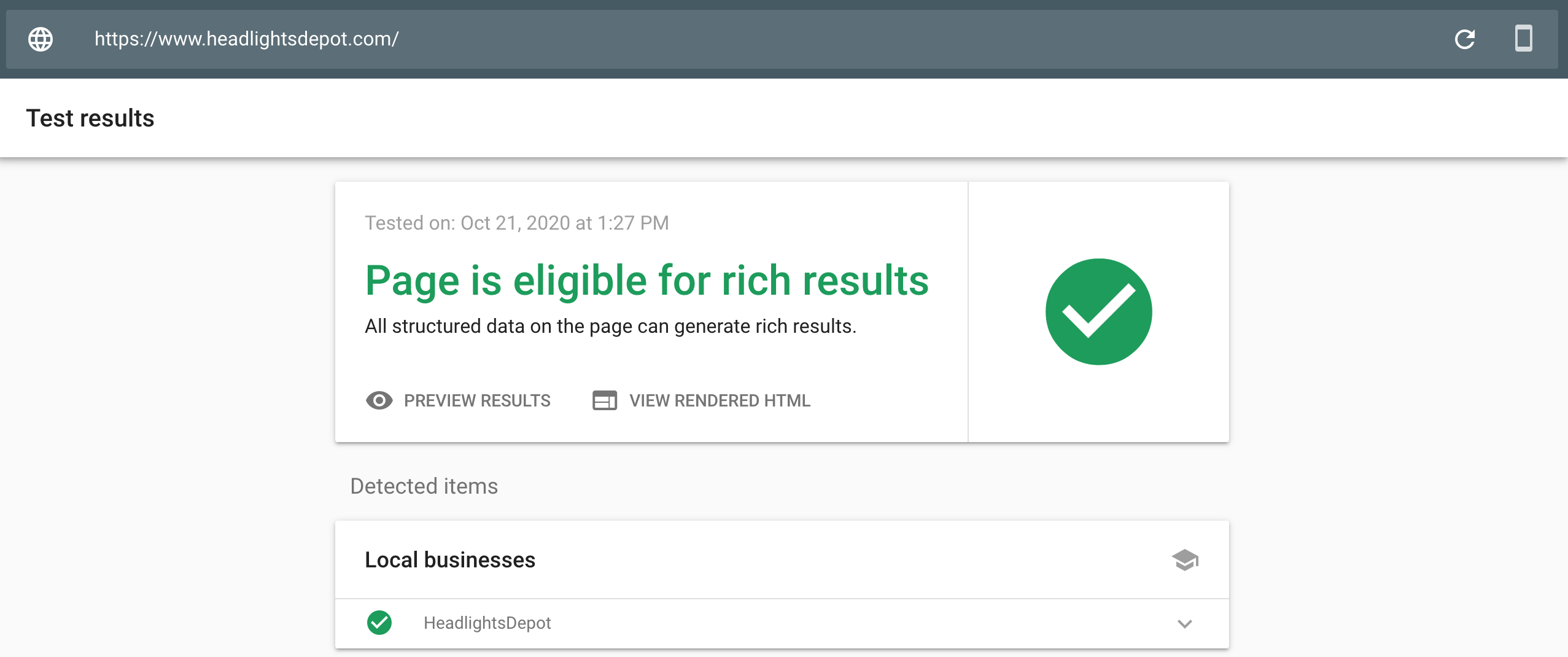

Google defines structured data as: “a standardized format for providing information about a page and classifying the page content…”

Structured data is a simple way to help Google search crawlers understand the content and data on a page. For example, if your page contains a recipe, an ingredient list would be an ideal type of content to feature in a structured data format. Address information like this example from Google is another type of data perfect for a structured data format.
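As an illustration of the address case, here is a minimal sketch that produces JSON-LD markup using the schema.org `Organization` and `PostalAddress` types. The function name and all sample values are placeholders, and which properties you need depends on the page type you are marking up.

```python
import json


def organization_jsonld(name, url, street, city, postal_code, country):
    """Return a JSON-LD <script> block describing a business address."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "postalCode": postal_code,
            "addressCountry": country,
        },
    }
    # Escape "</" so a stray "</script>" in the data cannot close the tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


snippet = organization_jsonld(
    "Example Store", "https://www.example.com",
    "1 Example Street", "Exampleville", "00000", "US",
)
```

The resulting block goes into the page HTML, and it is worth running it through a structured data testing tool before publishing.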

How to Check

How to Fix

Good internal and external links show both users and search crawlers that you have high-quality content. Over time, content changes, and once-great links break. Broken links create a poor user experience and reflect lower-quality content, a factor that can affect page ranking.

A website migration or relaunch project can spew out countless broken backlinks from other websites. Some of the top pages on your site may have become 404 pages after a migration.
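A basic internal link check can be sketched in a few lines: collect the links on a page, look up the status code of each, and report anything in the 4xx or 5xx range. In this sketch `get_status` is a stand-in you supply yourself (for example a function that performs a HEAD request), and the class and function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects absolute URLs from the <a href> tags on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Skip in-page anchors and non-HTTP schemes.
        if href and not href.startswith(("#", "mailto:", "tel:", "javascript:")):
            self.links.append(urljoin(self.base_url, href))


def find_broken_links(html, base_url, get_status):
    """Return (url, status) pairs for links answering with 4xx or 5xx."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    broken = []
    for url in collector.links:
        status = get_status(url)
        if status >= 400:
            broken.append((url, status))
    return broken
```

After a migration, feeding your top landing pages through a check like this quickly shows which links now end in a 404.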

How to Check

How to Fix

Redirects are an amazing tool in an SEO’s arsenal for managing and controlling dead pages, for consolidating multiple pages, and for making website migrations work without a hitch.

301 redirects are permanent and 302 redirects are temporary. The best practice is to always use 301 redirects when permanently redirecting a page.

301 redirects can be confusing for those new to SEO. They’re a lifesaver when used properly, but a pain when you have no idea what to do with them.
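Two things worth flagging in a redirect audit are chains (one redirect leading to another) and temporary 302s used where a permanent 301 was intended. The sketch below works on a hypothetical redirect map of the form `source -> (status, target)`, such as one exported from a crawl. The function names and issue labels are ours.

```python
def trace_redirects(url, redirects, max_hops=10):
    """Follow a URL through the redirect map and return every hop taken."""
    hops = []
    seen = {url}
    while url in redirects and len(hops) < max_hops:
        status, target = redirects[url]
        hops.append((url, status, target))
        if target in seen:  # redirect loop, stop here
            break
        seen.add(target)
        url = target
    return hops


def audit_redirect(url, redirects):
    """Return a list of issues found on the redirect path of a URL."""
    hops = trace_redirects(url, redirects)
    issues = []
    if len(hops) > 1:
        issues.append("redirect chain")
    if any(status == 302 for _, status, _ in hops):
        issues.append("temporary (302) redirect")
    return issues


redirect_map = {
    "/old-shoes": (302, "/shoes-2019"),
    "/shoes-2019": (301, "/shoes"),
}
```

Here `/old-shoes` is flagged twice: it takes two hops to reach `/shoes`, and the first hop is a 302.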

How to Check

How to Fix

If your site doesn’t load quickly, your users will go elsewhere. Site speed, like other usability factors, matters to the user experience and to Google.

How to Check

How to Fix

In December 2018, Google announced that it was using mobile-first indexing for more than half of the pages shown in search results. Google would have sent you an email when (or if) your site was transitioned. If you’re not sure whether your site has undergone the transition, you can also use Google’s URL Inspection Tool.

Whether or not Google has transitioned you to mobile-first indexing yet, you need to make sure your site is mobile friendly to deliver an exceptional mobile user experience. Anyone using responsive website design is probably in good shape. If you run an “m.” mobile site, you need to make sure the m-dot site is implemented correctly so you don’t lose your search visibility in a mobile-first world.

How to Fix

As your mobile site will be the one indexed, you’ll need to do the following for all m-dot web pages:

Broken images and images missing alt text are a missed SEO opportunity. The image alt attribute helps search engines index a page by telling the bot what the image is about. It’s a simple way to boost the SEO value of your page via the image content that enhances your user experience.
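Finding images without alt text is easy to automate. This is a minimal sketch with the standard-library HTML parser. The names are hypothetical, and it treats an empty `alt=""` as missing even though an empty alt is legitimate for purely decorative images, so review the results by hand.

```python
from html.parser import HTMLParser


class ImageAltAuditor(HTMLParser):
    """Records the src of every <img> with a missing or empty alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attrs = dict(attrs)
            if not (attrs.get("alt") or "").strip():
                self.missing_alt.append(attrs.get("src"))


def images_missing_alt(html):
    """Return the src values of images that have no usable alt text."""
    auditor = ImageAltAuditor()
    auditor.feed(html)
    return auditor.missing_alt
```

Product and category templates are a good place to start, since a single template fix usually covers thousands of images.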

How to Fix

Meta descriptions are those short, up to 160-character content blurbs that describe what the web page is about. These little snippets help the search engines index your page, and a well-written meta description can stimulate audience interest in the page.

It’s a simple SEO feature, but a lot of pages are missing this important content. You might not see this content on your page, but it’s an important feature that helps the user know if they want to click on your result or not. Like your page content, meta descriptions should be optimized to match what the user will read on the page, so try to include relevant keywords in the copy.

And although H1 tags are not as important as they once were, it’s still an on-site SEO best practice to display them prominently.

This matters most for large sites with many pages, such as massive eCommerce sites, because they can realistically rank their product or category pages with just a simple keyword-targeted main headline and a string of text.
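Both checks can be rolled into one small page audit. The sketch below flags a missing or overlong meta description and a missing H1. The 160-character limit comes from the guideline above, while the class name, function name, and issue labels are our own.

```python
from html.parser import HTMLParser


class HeadAuditor(HTMLParser):
    """Captures the meta description and counts H1 tags on a page."""

    def __init__(self):
        super().__init__()
        self.description = None
        self.h1_count = 0

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()
        elif tag == "h1":
            self.h1_count += 1


def audit_page(html, max_description_length=160):
    """Return a list of meta description and H1 issues for one page."""
    auditor = HeadAuditor()
    auditor.feed(html)
    issues = []
    if not auditor.description:
        issues.append("missing meta description")
    elif len(auditor.description) > max_description_length:
        issues.append("meta description too long")
    if auditor.h1_count == 0:
        issues.append("missing H1")
    return issues
```

An empty list means the page passed both checks. It says nothing about whether the copy is any good, so a manual read is still worthwhile.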

How to Fix

An SEO audit reveals a complete overview of site health and optimization efforts.

A website SEO audit is like a health check for your site. It allows you to check how your web pages appear in search results, so you can find and fix any weaknesses.